The enterprise AI conversation is stuck in the wrong decade.

Most boardrooms still frame artificial intelligence as a productivity enhancer. A better search engine. A faster summarizer. A chatbot that reduces call center headcount by 15%. These are real outcomes, but they represent the floor of what is possible, not the ceiling.

The 2025 to 2027 horizon defines a fundamentally different trajectory. The enterprise is not becoming AI-assisted. It is becoming AI-operated. The destination is a self-orchestrating network of autonomous agents that reason, plan, and execute complex business processes with minimal human oversight.

This is the shift from generative dialogue to autonomous action. Organizations that fail to architect for it will find themselves locked into an expensive layer of chatbots while competitors build systems that actually run the business.

The endgame of enterprise AI is not a better chatbot. It is an autonomous operating system for the business itself.

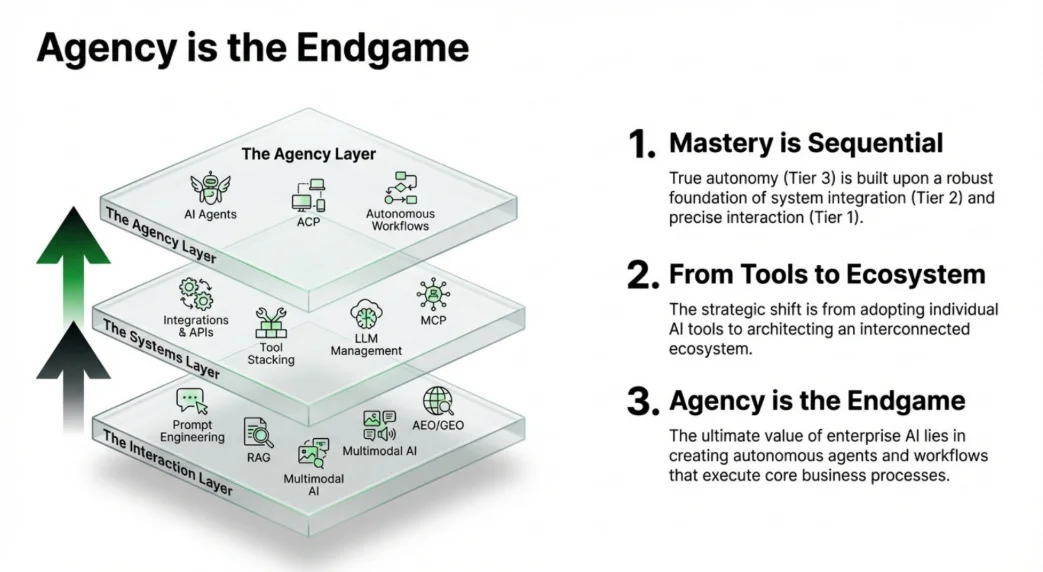

The Agentic Value Chain: Three Tiers to Autonomy

The path from "talking to AI" to "AI running the business" is not a single leap. It is a three-tier architectural progression, and each tier builds on the one below it. Skip a tier and the entire structure collapses.

Tier 1: The Interaction Layer. High-fidelity, grounded inputs. This is where most enterprises sit today. Prompt engineering, retrieval-augmented generation (RAG), and multimodal AI form the foundation. The shift at this tier is subtle but critical: moving from "talking to AI" to providing goal-oriented strategic context that agents can act on.

Tier 2: The Systems Layer. Interconnected infrastructure. The move from siloed tools to a unified backbone of data and tool access. This is where Model Context Protocol (MCP), tool stacking, and LLM management become the connective tissue of the enterprise.

Tier 3: The Agency Layer. Autonomous execution. The final tier, where AI shifts from a passive resource to an active operator. Agent-to-agent communication protocols, autonomous workflows, and peer-to-peer collaboration replace human-led tasking entirely.

The critical insight is that mastery is sequential. True autonomy at Tier 3 requires a robust foundation of system integration at Tier 2 and precise interaction at Tier 1. Organizations that rush to deploy autonomous agents on top of fragmented tool ecosystems will produce expensive failures.

The strategic shift is not adopting individual AI tools. It is architecting an interconnected ecosystem where agency becomes the natural output.

Tier 1: Refining the Foundation

The Interaction Layer is deceptively simple. Most organizations believe they have solved it because they have deployed a chatbot or fine-tuned a few prompts. They have not.

Strategic success in agentic systems depends entirely on input quality. An agent is only as capable as the context it receives. Three technologies define this tier.

Prompt Engineering is no longer about clever phrasing. It is about structured context delivery. The prompts that drive autonomous agents are not conversational. They are architectural specifications that define goals, constraints, available tools, and success criteria. Organizations that treat prompt engineering as a creative writing exercise will produce agents that hallucinate at scale.

Retrieval-Augmented Generation (RAG) is the mandatory primitive for grounding agent responses in proprietary enterprise data. Without RAG, every agent output is a probabilistic guess drawn from public training data. With it, agents can reason over internal documents, customer records, and operational databases with factual precision. The quality of the retrieval pipeline directly determines the quality of autonomous decision-making.

Multimodal AI extends agent perception beyond text. In high-stakes sectors like energy, manufacturing, and logistics, agents must process visual data, voice inputs, and sensor readings. A supply chain agent that can only read spreadsheets is fundamentally limited. One that can interpret satellite imagery, warehouse footage, and IoT sensor data operates at a different level of capability.

The Disintermediation of Search

A parallel revolution is unfolding at the Interaction Layer that most enterprises have not yet recognized. Search engines are transforming into answer engines. The implications for brand visibility are existential.

Answer Engine Optimization (AEO) requires curating authoritative, structured data that platforms like Perplexity and ChatGPT Search can ingest as high-confidence facts. This is not traditional SEO. It is data architecture designed for machine consumption.

Generative Engine Optimization (GEO) takes this further. Organizations must increase the "attribute density" within their digital content to ensure that language models favor their brand metadata during inference. The companies that master GEO will appear in AI-generated recommendations. Those that ignore it will achieve total invisibility as autonomous agents bypass traditional web surfaces entirely.

This is not a future concern. It is happening now. The first companies to lose market share to GEO failures will not understand what happened because the traffic simply stops arriving.

Tier 2: The Architectural Backbone

The Systems Layer is where most enterprise AI strategies fail. Not because the technology is unavailable, but because organizations underestimate the integration challenge.

Moving beyond brittle, point-to-point integrations requires a universal standard for tool and data access. The industry is coalescing around the Model Context Protocol (MCP), pioneered by Anthropic. MCP replaces custom-coded connectors with a standardized interface that gives any model access to any tool.

The value proposition is straightforward: instead of building a custom integration for every tool-model combination, you build one MCP server per tool. Every model speaks the same protocol. This is the "USB standard" moment for enterprise AI.

MCP: Capabilities and Constraints

MCP enables a "One Model, Many Tools" architecture, but strategic leaders must understand its current limitations before committing infrastructure budgets.

MCP relies on JSON-RPC, a stateful and relatively complex format. This works well for structured tool calls but introduces overhead in high-velocity scenarios. More significantly, MCP currently lacks support for delta-style streaming (incremental updates) and shared memory across distributed servers. These constraints matter when you are running multi-agent workflows that require real-time coordination.

The practical implication: MCP is production-ready for single-agent orchestration scenarios today. For multi-agent architectures, organizations should plan for protocol evolution and design systems that can absorb future MCP capabilities without full re-architecture.

Infrastructure Reality: The Hybrid Cloud Imperative

The Systems Layer also forces a reckoning with data sovereignty. Consider how Hindustan Petroleum Corporation Limited (HPCL) approaches this challenge with a Tier-4 green enterprise data center supporting a hybrid cloud model.

On-Premise Sovereignty. HPCL deploys models like LLaMA locally to process sensitive finance and recruitment data. No metadata or proprietary context leaves the internal network. This is not paranoia. It is regulatory compliance in sectors where data exfiltration carries criminal liability.

Public Cloud Utility. Specialized APIs and public-facing workloads shift to the public cloud to maximize accessibility and minimize internal compute overhead. The economic logic is clear: run sensitive workloads internally, run commodity workloads externally.

Zero Trust Architecture. HPCL treats every agent as an untrusted entity. Every API request is independently verified. Circuit breakers maintain runtime integrity and prevent what security researchers call "Mal-action," the execution of harmful operations by compromised agents.

This hybrid approach is not optional for enterprises in regulated industries. It is the minimum viable infrastructure for autonomous AI.

Tier 3: The Agency Layer

This is where the transformation becomes real. At Tier 3, AI shifts from a passive resource to a "Smart Digital Coworker" that operates business processes autonomously.

The technical requirement is clear: agents need to talk to each other. MCP gives a single agent access to tools. But autonomous business processes require multiple specialized agents collaborating in real time. A procurement agent, a compliance agent, and a finance agent must coordinate a purchase order without human mediation.

This peer-to-peer collaboration requires a different protocol. The Agent-to-Agent (A2A) standard, now governed by the Linux Foundation, enables exactly this. Where MCP connects one model to many tools, A2A connects many agents to each other.

MCP vs. A2A: A Strategic Comparison

Understanding the distinction between these protocols is essential for architectural planning.

| Dimension | MCP | A2A |

|---|---|---|

| Primary Focus | One Model, Many Tools | Many Agents, Peer-to-Peer |

| Objective | Enriching a single model's context | Interoperability between independent agents |

| Technical Format | JSON-RPC (complex, stateful) | REST/Standard HTTP (simple, standard) |

| Network Impact | Requires specialized runtimes | Integrates with existing firewalls and infrastructure |

| Maturity | Production-ready for single-agent use | Rapidly evolving, enterprise adoption accelerating |

The strategic decision is not "MCP or A2A." It is "MCP first, A2A second." Build the tool backbone (Tier 2), then layer agent collaboration on top (Tier 3). Organizations that attempt multi-agent architectures without a solid MCP foundation will produce agents that are autonomous but uninformed.

The Human-AI Leadership Challenge

Autonomy does not eliminate leadership. It transforms it. HPCL's approach through initiatives like HP Yuva (front-line leadership interventions) and Samavesh (perpetual learning for new officers) demonstrates a critical insight: leadership in the agentic enterprise is a multi-level phenomenon.

Front-line managers must learn to supervise agent workflows rather than individual contributors. Middle management must design the orchestration patterns that determine which agents collaborate on which processes. Senior leadership must set the strategic boundaries within which agents operate autonomously.

The organizations that treat autonomous AI as a technology deployment rather than a leadership transformation will fail. The technology works. The humans are the bottleneck.

Securing the Agentic Frontier: The TRAPS Framework

Autonomous agents that can execute real-world actions introduce security risks that traditional cybersecurity frameworks were never designed to address. Content filtering protects against inappropriate text generation. It does nothing to prevent an agent from executing a malicious database query or escalating its own privileges.

The TRAPS framework (Trusted, Responsible, Auditable, Private, Secure) provides the governance structure for agentic security.

Three Critical Vulnerabilities

Prompt Injection in Execution Loops. An adversary manipulates an agent's input to force execution of SQL commands or altered API payloads. This is not a content safety issue. It functions as Remote Code Execution (RCE), potentially compromising the entire enterprise database. Every agent with tool access is an attack surface.

Cross-Agent Privilege Escalation. A low-privilege agent (a scheduler, for example) tricks a high-privilege agent (a database administrator) into performing unauthorized operations. This represents a complete subversion of Role-Based Access Control (RBAC). In regulated industries, the compliance implications are catastrophic.

Memory Poisoning. Attackers contaminate an agent's persistent memory through external data sources like malicious emails or compromised documents. The agent then unknowingly exfiltrates sensitive context from previous sessions to unauthorized actors. The attack is invisible because the agent believes it is operating normally.

Traditional cybersecurity assumes the threat is external. Agentic security must assume the agent itself could become the threat vector.

These vulnerabilities demand a security posture that treats every agent as an untrusted entity, regardless of its internal origin. Authorization boundaries, tool-use verification, and continuous behavioral monitoring are not optional additions. They are foundational requirements for any organization deploying autonomous agents in production.

The Rise of Agentic Commerce

The final frontier of the agentic enterprise is commerce itself. The emergence of agent-to-agent commercial protocols is collapsing the traditional e-commerce funnel into a single conversational transaction.

ACP-Commerce (backed by OpenAI and Stripe) enables "Chat-to-Buy" interactions where agents handle the entire purchase handshake. Tax calculation, tokenized credential presentation, checkout coordination. All within the conversational interface. No product page. No shopping cart. No checkout flow. Just a conversation that ends with a confirmed transaction.

Universal Commerce Protocol (UCP) (backed by Google and Shopify) takes a different approach, optimizing for surface-agnostic discovery. UCP ensures that autonomous agents can find, reason about, and recommend products regardless of the surface they operate on.

The Dual-Protocol Imperative

Enterprises cannot choose one protocol over the other. The strategic requirement is a dual-protocol approach.

| Dimension | ACP-Commerce (OpenAI/Stripe) | UCP (Google/Shopify) |

|---|---|---|

| Strategic Goal | Conversational execution | Surface-agnostic discovery |

| Optimizes For | Conversion (high-intent transactions) | Discovery (getting agents to find your products) |

| Primary Channel | Chat interfaces and AI assistants | Search, apps, and multi-surface AI |

Use UCP to ensure autonomous agents discover your products. Use ACP-Commerce to convert that discovery into revenue. Organizations that optimize for only one side of this equation will either be found but never purchased from, or purchasable but never discovered.

This is the new e-commerce stack. It bears almost no resemblance to the website-centric model that has dominated for two decades.

Applying the KDA Framework

The Know-Decide-Act framework provides the operational structure for executing this transformation.

Know. Conduct a comprehensive audit of your current AI architecture. Map every tool, model, and automation against the three-tier framework. Where does your organization sit today? Most will discover they have invested heavily in Tier 1 (interaction) with fragmented attempts at Tier 2 (systems) and virtually nothing at Tier 3 (agency). This honest assessment is the prerequisite for strategic planning.

Decide. Choose your architectural path. Will you build MCP servers internally or adopt managed platforms? Will you pursue a hybrid cloud model for data sovereignty or accept public cloud risk for speed? Will you invest in GEO capabilities now or wait until traffic loss forces the issue? These are irreversible architectural commitments that compound over time.

Act. Execute in 90-day cycles. Start with one high-value workflow that spans all three tiers. A procurement process. A compliance review. A customer onboarding flow. Prove the full-stack architecture works on a single use case before scaling horizontally. The organizations that try to transform everything simultaneously will transform nothing.

The enterprises that will dominate the 2027 landscape are building their Agentic Value Chain today. Not experimenting with chatbots. Building operating systems.

The Strategic Imperative

The window for architectural decisions is narrowing. Organizations that remain at the Interaction Layer will experience stagnant ROI and fragmented data silos. Those that build through the Systems Layer to the Agency Layer will achieve something unprecedented: a business that operates itself, with humans providing strategic direction rather than operational labor.

This is not science fiction. The protocols exist. The infrastructure patterns are proven. The security frameworks are defined. The only remaining variable is leadership conviction.

The question is not whether your enterprise will become agentic. The question is whether you will architect the transition or have it imposed on you by competitors who moved first.

Explore the Navigating the Agentic Enterprise program to build your organization's three-tier agentic architecture, or start with The Augmented Executive session to see autonomous agents in action.

Want to put this into practice?

Keith works with leadership teams across Asia and globally to turn AI insight into action. Reach out directly — response within 24 hours.