Generative AI has moved beyond the experimental periphery and is becoming a core architect of modern financial services. Credit decisions, algorithmic trading, and infrastructure optimization increasingly run on AI-driven systems that now define the competitive baseline. This shift creates a critical vulnerability: the gap between technological capacity and regulatory readiness threatens institutional trust on a global scale.

For senior decision-makers, bridging this gap is not a compliance exercise. It is a strategic necessity. The pace of innovation must not outstrip the governance required to maintain market integrity. The Bretton Woods Committee has identified this as the defining challenge of the current era in global finance.

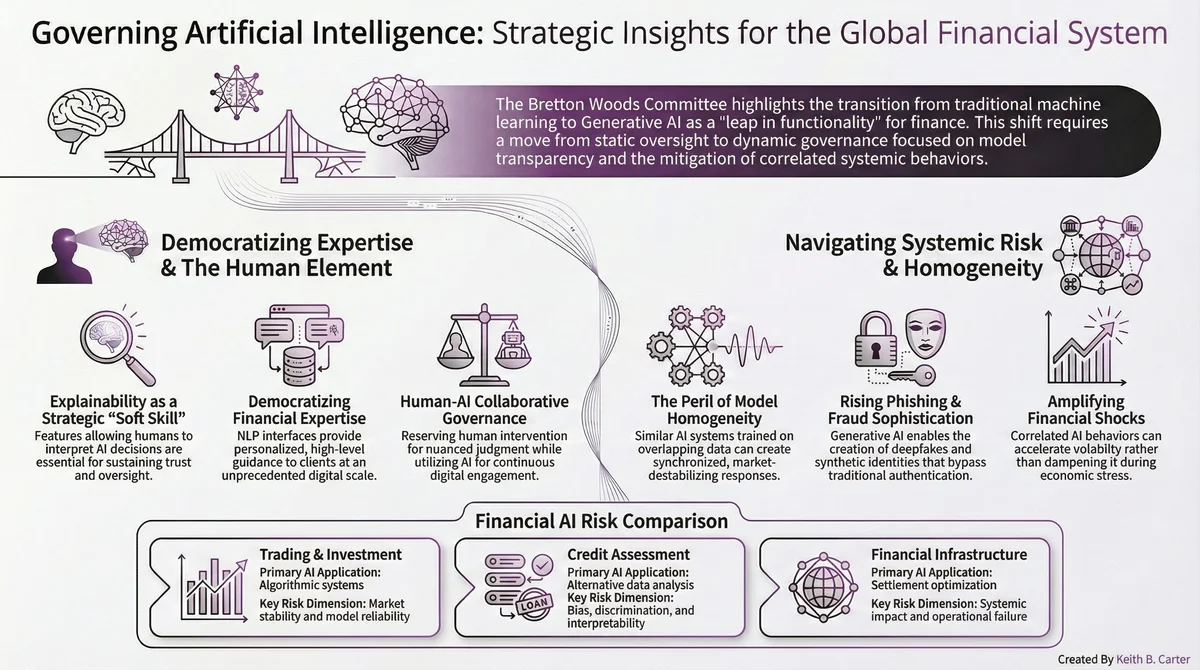

The Generative Evolution: A New Financial Architecture

Traditional machine learning and generative AI represent fundamentally different operational paradigms. Traditional models are reactive. They rely on structured data to forecast known variables. Generative AI is autonomous and creative, producing original outputs across text, code, images, and financial instruments.

The differences are structural:

- Core function: Traditional ML detects patterns and forecasts. Generative AI creates novel content and decisions.

- Output type: Traditional ML produces predictive outputs from structured data. Generative AI produces original, often unstructured outputs such as text, code, and images.

- Data requirements: Traditional ML relies on predefined features and structured datasets. Generative AI trains on massive, diverse datasets with minimal feature engineering.

- Task adaptability: Traditional ML requires domain-specific customization. Generative AI generalizes across tasks with minimal reconfiguration.

The leap from pattern detection to autonomous creation fundamentally changes what financial institutions must govern. The strategist's role shifts from auditing static code to auditing dynamic outcomes.

This evolution traces back to Alan Turing's 1948 inquiry into machines that learn from experience. Modern AI uses neural networks that learn by example and, in effect, derive their own decision logic from data rather than following explicitly programmed rules. Because these systems effectively program themselves, human oversight must shift toward interpretability: making the behavior of an otherwise opaque "black box" legible enough to withstand scrutiny.

The KDA framework applies directly here. Financial leaders must Know the technical capabilities and limitations of generative AI, Decide on governance architectures that match their risk profile, and Act on implementation with measurable oversight mechanisms.

Explainability: The Strategic Soft Skill of Financial Governance

In a landscape dominated by complex neural networks, explainability has graduated from a technical requirement to a critical governance competency. The ability to interpret and communicate the rationale behind a model's output is a strategic prerequisite for maintaining fiduciary duty and market trust.

Effective governance requires deconstructing model behavior across three distinct levels.

Local Explainability

This focuses on the rationale behind an individual decision. In high-stakes contexts, this means providing the specific legal and financial justification for a credit denial to satisfy consumer protection mandates. Every affected customer deserves a clear answer, and every regulator expects one.
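To make local explainability concrete, here is a minimal Python sketch of "reason codes" for a single credit decision from a linear scorecard. The feature names, weights, and baseline profile are hypothetical, chosen only for illustration. Real scorecards, and the methods regulators accept for deriving reasons from them, vary by institution and jurisdiction. Each feature's contribution is its weight times the applicant's deviation from a reference profile. The most negative contributions are reported as the reasons behind the decision.

```python
# Hypothetical linear scorecard: weights and the reference ("baseline") profile
# are illustrative assumptions, not taken from any real lending model.
WEIGHTS = {"debt_to_income": -4.0, "months_since_delinquency": 0.05, "credit_utilization": -2.5}
BASELINE = {"debt_to_income": 0.30, "months_since_delinquency": 36.0, "credit_utilization": 0.30}


def reason_codes(applicant: dict[str, float], top_n: int = 2) -> list[tuple[str, float]]:
    """Features that pulled this applicant's score furthest below the baseline profile.

    Contribution = weight * (applicant value - baseline value); only negative
    contributions count as reasons, and they are returned most negative first.
    """
    contributions = {
        name: w * (applicant[name] - BASELINE[name]) for name, w in WEIGHTS.items()
    }
    # Sort (contribution, name) pairs ascending so the most damaging factor comes first.
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [(name, c) for c, name in negatives[:top_n]]


applicant = {"debt_to_income": 0.55, "months_since_delinquency": 6.0, "credit_utilization": 0.80}
for name, contribution in reason_codes(applicant):
    print(f"{name}: {contribution:+.2f}")
```

For non-linear models, attribution methods such as SHAP play the same role, at the cost of more computation and more care in choosing the reference profile.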

Cohort Explainability

This analyzes performance across defined groups to detect disparate outcomes. A loan-pricing model must be tested to ensure it does not produce biased results for specific demographic segments. The consequences of failure here are both legal and reputational.
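A cohort check can start with a simple comparison of approval rates across groups. The sketch below divides each cohort's approval rate by the rate of the most-approved cohort. It flags any ratio under 0.8, the "four-fifths" heuristic from US employment-selection guidance. That cutoff is used here only as an assumed screening threshold, not as a legal test for lending. Cohort labels and outcomes are hypothetical. For a pricing model, the analogous check would compare average offered rates per cohort.

```python
from collections import defaultdict


def approval_ratios(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Each cohort's approval rate divided by the highest cohort approval rate."""
    approved: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for cohort, was_approved in decisions:
        total[cohort] += 1
        approved[cohort] += int(was_approved)
    rates = {c: approved[c] / total[c] for c in total}
    best = max(rates.values())
    # If no cohort has any approvals, there is no relative disparity to measure.
    return {c: (r / best if best > 0 else 1.0) for c, r in rates.items()}


def flag_cohorts(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Cohorts whose approval ratio falls below the assumed four-fifths threshold."""
    return sorted(c for c, ratio in approval_ratios(decisions).items() if ratio < threshold)


# Hypothetical outcomes: cohort A approved 80 of 100, cohort B approved 56 of 100.
decisions = [("A", True)] * 80 + [("A", False)] * 20 + [("B", True)] * 56 + [("B", False)] * 44
print(approval_ratios(decisions))  # B's ratio is 0.56 / 0.80, about 0.70
print(flag_cohorts(decisions))     # ['B']
```

A flag like this is a prompt for investigation, not a verdict. The next step is to check whether legitimate, documented credit factors explain the gap.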

Global Explainability

This evaluates which features most significantly influence a model's overall behavior. For algorithmic trading strategies, this means determining whether geopolitical events, interest rate shifts, or other market signals drive portfolio-level decisions.
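Permutation importance is one common way to measure which features drive a model's overall behavior. It shuffles one input column, breaking that column's link to the outcome, and measures how much the model's error rises. The model below is a hypothetical linear trading signal over made-up features (`rate_shift`, `geo_risk`, `noise`). The targets are generated from the model itself, so the baseline error is zero. In practice, the targets would be observed outcomes.

```python
import random
from statistics import mean

FEATURES = ["rate_shift", "geo_risk", "noise"]


def model(row: dict[str, float]) -> float:
    """Hypothetical trading signal; it ignores "noise" by construction."""
    return 2.0 * row["rate_shift"] + 0.5 * row["geo_risk"]


def mse(rows: list[dict[str, float]], targets: list[float]) -> float:
    return mean((model(r) - t) ** 2 for r, t in zip(rows, targets))


def permutation_importance(rows, targets, n_repeats: int = 5, seed: int = 0) -> dict[str, float]:
    """Average rise in mean squared error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(rows, targets)
    scores = {}
    for feat in FEATURES:
        rises = []
        for _ in range(n_repeats):
            column = [r[feat] for r in rows]
            rng.shuffle(column)  # break the link between this feature and the target
            permuted = [{**r, feat: v} for r, v in zip(rows, column)]
            rises.append(mse(permuted, targets) - baseline)
        scores[feat] = mean(rises)
    return scores


# Synthetic data; in practice targets would be observed outcomes, not model output.
data_rng = random.Random(42)
rows = [{f: data_rng.uniform(-1.0, 1.0) for f in FEATURES} for _ in range(200)]
targets = [model(r) for r in rows]
importance = permutation_importance(rows, targets)
for feat, score in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{feat:>10}: {score:.3f}")
```

Permutation importance only needs predictions, not model internals, so the same procedure applies to a neural network. One caveat applies to correlated inputs: shuffling one of them can understate its influence.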

Model transparency is now a primary competitive differentiator. The more interpretable a firm's models are, the better equipped it is to navigate the legal complexities of capital markets.

By implementing feature importance analysis and sensitivity testing, institutions can surface model errors and hidden biases before they cause harm, rather than after. This transparency is also the essential foundation for democratizing sophisticated financial guidance to a broader market.
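Sensitivity testing complements feature importance by asking how a single decision moves when one input is nudged. This one-at-a-time sketch raises each input by 1% and records the change in a hypothetical logistic approval score. The weights, intercept, and feature names are illustrative assumptions. If a feature that should be irrelevant shows non-zero sensitivity, that is a signal to investigate.

```python
import math

# Hypothetical logistic approval model; weights are illustrative assumptions.
WEIGHTS = {"debt_to_income": -3.0, "income_k": 0.02, "tenure_years": 0.0}
INTERCEPT = 1.5


def approval_probability(x: dict[str, float]) -> float:
    z = INTERCEPT + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity(x: dict[str, float], rel_step: float = 0.01) -> dict[str, float]:
    """Change in approval probability when each input alone is raised by rel_step.

    The step is relative (1% by default); zero-valued inputs get an absolute step.
    """
    base = approval_probability(x)
    changes = {}
    for name, value in x.items():
        bumped = dict(x)
        bumped[name] = value * (1 + rel_step) if value != 0 else rel_step
        changes[name] = approval_probability(bumped) - base
    return changes


applicant = {"debt_to_income": 0.40, "income_k": 60.0, "tenure_years": 5.0}
print(sensitivity(applicant))
```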

Democratizing Expertise: Personalization at Scale

The integration of natural language processing (NLP) is fundamentally disrupting the delivery of financial expertise. High-value advice that once required a human adviser is becoming a scalable digital channel. Firms can now provide the retail market with contextually relevant guidance that was previously the sole province of high-net-worth individuals.

This represents a radical shift in the industry's cost-to-serve model. AI analyzes transaction histories and individual objectives in real time, transforming high-touch advisory services into low-marginal-cost digital products. Recommendations become not just tailored but dynamically adaptive to the user's evolving financial life.

In credit assessment, AI-driven models are reducing long-standing information asymmetries through three mechanisms:

- Processing unstructured data: Analyzing alternative sources such as news feeds, regulatory filings, and industry reports provides a more nuanced risk assessment than traditional financial statements alone.

- Detecting behavioral signals: AI identifies subtle repayment patterns, allowing lenders to assess borrower intent and capacity with greater precision.

- Expanding market access: These tools enable credit assessments for individuals traditionally excluded by standardized underwriting criteria.

There is a strategic warning embedded in this progress. The same tools that expand access can automate discrimination if not strictly governed. Historical biases embedded in training data will propagate at scale unless institutional leaders implement active monitoring and correction protocols.

The Systemic Risk Profile: Model Homogeneity and Concentration

The interconnectedness of AI-driven markets introduces a novel systemic risk profile. The primary threat is not individual model failure. It is model correlation. When diverse institutions deploy similar AI architectures trained on overlapping datasets, the market loses its "biological diversity" and risks reacting as a single, synchronized entity during a crisis.

The Synchronization Problem

Model homogeneity creates an environment where institutions are "synchronized in their error." During market shocks, correlated models may trigger simultaneous asset sales or liquidity withdrawals, creating a vacuum that turns a routine correction into a collapse. This is not theoretical. Flash crashes have already demonstrated how algorithmic correlation amplifies volatility.

The Feedback Loop Vulnerability

AI models trained on historical data often fail to adapt to new economic regimes such as a pandemic, sudden trade restrictions, or a sovereign debt crisis. Their parallel actions, based on outdated assumptions, can amplify market dislocation rather than absorb it. The feedback loop between model behavior and market reality becomes self-reinforcing in exactly the wrong direction.

Non-Market Systemic Threats

Beyond market volatility, generative AI introduces cybersecurity vulnerabilities that require constant technical vigilance:

- Model inversion attacks: Adversarial attempts to reconstruct sensitive training data by analyzing a model's outputs.

- Automated vulnerability discovery: Malicious use of large language models (LLMs) to identify software flaws in financial infrastructure faster than traditional scanners.

- Synthetic identity fraud: Fabricated identities designed to bypass Know Your Customer (KYC) protocols at scale.

- Deepfake impersonation: Sophisticated video or voice impersonation of executives used to authorize fraudulent transactions.

Managing these correlated vulnerabilities demands a coordinated, forward-looking oversight framework that monitors model behavior at systemic scale. The Agentic AI for Financial Services program addresses exactly this challenge, equipping leaders with frameworks to detect and manage AI-driven risks across their organizations.

Operational Optimization and Infrastructure Resilience

While consumer-facing AI captures attention, the hidden architecture of finance, including trading, clearing, and settlement, is where AI provides the connective tissue for market integrity. Strategic integration in back-office operations is a prerequisite for operational resilience.

Three industry proof points demonstrate the gains currently available:

- JPMorgan's COiN: The Contract Intelligence platform reviews commercial loan agreements in seconds, work that reportedly consumed about 360,000 hours of lawyers' and loan officers' time each year, while improving compliance accuracy.

- Nasdaq's Dynamic M-ELO: Reinforcement learning adjusts the holding period for Midpoint Extended Life Orders (M-ELO) in response to market conditions, improving execution outcomes for institutional investors.

- Predictive settlement: AI models analyze historical patterns to identify potential settlement failures before they occur, enabling preemptive collateral management.

These capabilities deliver measurable value. They also introduce a critical trade-off between efficiency and fragility. The more a process depends on a model tuned for efficiency, the more exposed it is to "model drift," a gradual deterioration of performance as real-world data diverges from training data. Without rigorous governance over data lineage and security, even the most advanced AI tools rest on unstable foundations.

Robust data management, backed by continuous performance monitoring, is the primary defense against model drift. The integrity of operational AI depends on the quality of its data governance.
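The population stability index (PSI) is a widely used way to catch drift in a model's inputs or scores. It compares how a variable is distributed in the training data with how it is distributed in recent production data: PSI = sum over bins of (actual share - expected share) * ln(actual share / expected share). A common rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift. These cutoffs are industry conventions, not regulatory standards. The bin edges and sample data below are illustrative assumptions.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values falling in each bin defined by sorted cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population stability index of `actual` (production) against `expected` (training)."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        # Floor empty bins so the log stays finite; results are sensitive to eps then.
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def classify(value: float) -> str:
    # Rule-of-thumb cutoffs, not regulatory standards.
    if value > 0.25:
        return "significant shift"
    if value > 0.1:
        return "monitor"
    return "stable"


# Illustrative data: training scores spread evenly over [0, 1); recent scores
# bunched toward the top of the range.
training = [i / 100 for i in range(100)]
recent = [0.6 + i / 250 for i in range(100)]
edges = [0.25, 0.5, 0.75]
drift = psi(training, recent, edges)
status = classify(drift)
print(f"PSI = {drift:.2f} ({status})")
```

PSI flags that the inputs have moved, not that predictions have degraded. It is therefore usually paired with outcome-based performance tracking once actual results arrive.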

Three Pillars for the Algorithmic Future

The path forward for global finance requires balancing bold innovation with resilient governance. The integrity of the financial system depends as much on algorithmic oversight as it does on capital reserves.

Financial leaders must prioritize three core pillars:

Explainability. AI decisions must remain transparent and human-interpretable. Trust erodes the moment a regulator, customer, or board member cannot understand why a system made a specific choice.

Governance structures. Protocols must manage model risk, data integrity, and legal liability in an environment where AI systems program themselves. Static compliance frameworks are insufficient for dynamic systems.

Systemic risk monitoring. The capability to detect synchronized behaviors and AI-driven threats across interconnected markets must be built into institutional DNA. Waiting for a crisis to reveal model correlation is not a strategy.

Decision-makers who prioritize growth alongside system-wide integrity will harness the transformative power of generative AI while safeguarding the stability of the global economy.

These three pillars map directly to the KDA framework. Know your models and their limitations through explainability. Decide on governance structures that match your risk exposure. Act on systemic monitoring before correlated failures materialize.

The financial sector stands at an inflection point. Generative AI is not a tool to be deployed and forgotten. It is a system to be governed, monitored, and continuously aligned with the integrity standards that global markets demand. The leaders who build this governance capability now will define the next chapter of financial stability.

Ready to build AI governance capability into your financial services organization? Explore the Agentic AI for Financial Services program or the Banking Agentic Revolution executive workshop to get started.

Want to put this into practice?

Keith works with leadership teams across Asia and globally to turn AI insight into action. Reach out directly for a response within 24 hours.